TypeScript’s Migration to Modules

One of the most impactful things we’ve worked on in TypeScript 5.0 isn’t a feature, a bug fix, or a data structure optimization. Instead, it’s an infrastructure change.

In TypeScript 5.0, we restructured our entire codebase to use ECMAScript modules, and switched to a newer emit target.

What to Know

Now, before we dive in, we want to set expectations. It’s good to know what this does and doesn’t mean for TypeScript 5.0.

As a general user of TypeScript, you’ll need to be running Node.js 12 at a minimum.

npm installs should go a little faster and take up less space, since the typescript package size should be reduced by about 46%.

Running TypeScript will get a nice bit faster – typically cutting build times by anywhere between 10% and 25%.

As an API consumer of TypeScript, you’ll likely be unaffected.

TypeScript won’t be shipping its API as ES modules yet, and will still provide a CommonJS-authored API.

That means existing build scripts will still work.

If you rely on TypeScript’s typescriptServices.js and typescriptServices.d.ts files, you’ll be able to rely on typescript.js/typescript.d.ts instead.

If you’re importing protocol.d.ts, you can switch to tsserverlibrary.d.ts and leverage ts.server.protocol.

Finally, as a contributor of TypeScript, your life will likely become a lot easier. Build times will be a lot faster, incremental check times should be faster, and you’ll have a more familiar authoring format if you already write TypeScript code outside of our compiler.

Some Background

Now that might sound surprising – modules?

Like, files with imports and exports?

Isn’t almost all modern JavaScript and TypeScript using modules?

Exactly! But the current TypeScript codebase predates ECMAScript’s modules – our last rewrite started in 2014, and modules were standardized in 2015. We didn’t know how (in)compatible they’d be with other module systems like CommonJS, and to be frank, there wasn’t a huge benefit for us at the time for authoring in modules.

Instead, TypeScript leveraged namespaces – formerly called internal modules.

Namespaces had a few useful features. For example, their scopes could merge across files, meaning it was easy to break up a project across files and expose it cleanly as a single variable.

// parser.ts

namespace ts {

export function createSourceFile(/*...*/) {

/*...*/

}

}

// program.ts

namespace ts {

export function createProgram(/*...*/) {

/*...*/

}

}

// user.ts

// Can easily access both functions from 'ts'.

const sourceFile = ts.createSourceFile(/*...*/);

const program = ts.createProgram(/*...*/);

It was also easy for us to reference exports across files at a time when auto-import didn’t exist.

Code in the same namespace could access each other’s exports without needing to write import statements.

// parser.ts

namespace ts {

export function createSourceFile(/*...*/) {

/*...*/

}

}

// program.ts

namespace ts {

export function createProgram(/*...*/) {

// We can reference 'createSourceFile' without writing

// 'ts.createSourceFile' or writing any sort of 'import'.

let file = createSourceFile(/*...*/);

}

}

In retrospect, these features from namespaces made it difficult for other tools to support TypeScript; however, they were very useful for our codebase.

Fast-forward several years, and we were starting to feel more of the downsides of namespaces.

Issues with Namespaces

TypeScript is written in TypeScript. This occasionally surprises people, but it’s a common practice for compilers to be written in the language they compile. Doing this really helps us understand the experience we’re shipping to other JavaScript and TypeScript developers. The jargon-y way to say this is: we bootstrap the TypeScript compiler so that we can dog-food it.

Most modern JavaScript and TypeScript code is authored using modules. By using namespaces, we weren’t using TypeScript the way most of our users are. So many of our features are focused around using modules, but we weren’t using them ourselves. So we had two issues here: we weren’t just missing out on these features – we were missing a ton of the experience in using those features.

For example, TypeScript supports an incremental mode for builds.

It’s a great way to speed up consecutive builds, but it’s effectively useless in a codebase structured with namespaces.

The compiler can only effectively do incremental builds across modules, but our namespaces just sat within the global scope (which is usually where namespaces will reside).

So we were hurting our ability to iterate on TypeScript itself, along with properly testing out our incremental mode on our own codebase.

This goes deeper than compiler features – experiences like error messages and editor scenarios are built around modules too. Auto-import completions and the "Organize Imports" command are two widely used editor features that TypeScript powers, and we weren’t relying on them at all.

Runtime Performance Issues with Namespaces

Some of the issues with namespaces are more subtle. Up until now, most of the issues with namespaces might have sounded like pure infrastructure issues – but namespaces also have a runtime performance impact.

First, let’s take a look at our earlier example:

// parser.ts

namespace ts {

export function createSourceFile(/*...*/) {

/*...*/

}

}

// program.ts

namespace ts {

export function createProgram(/*...*/) {

createSourceFile(/*...*/);

}

}

Those files will be rewritten to something like the following JavaScript code:

// parser.js

var ts;

(function (ts) {

function createSourceFile(/*...*/) {

/*...*/

}

ts.createSourceFile = createSourceFile;

})(ts || (ts = {}));

// program.js

(function (ts) {

function createProgram(/*...*/) {

ts.createSourceFile(/*...*/);

}

ts.createProgram = createProgram;

})(ts || (ts = {}));

The first thing to notice is that each namespace is wrapped in an IIFE.

Each occurrence of a ts namespace has the same setup/teardown that’s repeated over and over again – which in theory could be optimized away when producing a final output file.

The second, more subtle, and more significant issue is that our reference to createSourceFile had to be rewritten to ts.createSourceFile.

Recall that this was actually something we liked – it made it easy to reference exports across files.

However, there is a runtime cost.

Unfortunately, there are very few zero-cost abstractions in JavaScript, and invoking a method off of an object is more costly than directly invoking a function that’s in scope.

So running something like ts.createSourceFile is more costly than createSourceFile.

The performance difference between these operations is usually negligible. Or at least, it’s negligible until you’re writing a compiler, where these operations occur millions of times over millions of nodes. We realized this was a huge opportunity for us to improve a few years ago when Evan Wallace pointed out this overhead on our issue tracker.
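To make the difference between the two call shapes concrete, here is a minimal sketch. The names `namespaceObject`, `viaNamespace`, and `viaLocal` are hypothetical and not taken from the TypeScript codebase. It illustrates only the semantic difference (a property that must be read at call time because it can be reassigned); it is not a benchmark.

```typescript
// Hypothetical stand-in for the emitted 'ts' namespace object.
const namespaceObject: { createSourceFile: (text: string) => string } = {
    createSourceFile: (text) => `parsed:${text}`,
};

// An in-scope function: calls resolve through scope, with no object property read.
function createSourceFileLocal(text: string): string {
    return `parsed:${text}`;
}

// Mirrors the namespace emit: the property is looked up on every call.
function viaNamespace(text: string): string {
    return namespaceObject.createSourceFile(text);
}

// Mirrors a direct call to a function declared in the same scope.
function viaLocal(text: string): string {
    return createSourceFileLocal(text);
}

const resultBeforePatch = viaNamespace("a");
// Anyone holding the object can swap the property, so the lookup cannot simply be skipped.
namespaceObject.createSourceFile = (text) => `patched:${text}`;
const resultAfterPatch = viaNamespace("a");
const resultLocal = viaLocal("a");
```

Here `resultBeforePatch` and `resultAfterPatch` differ even though `viaNamespace` itself never changed, which is the kind of flexibility an engine has to account for on every call through the object.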

But namespaces aren’t the only construct that can run into this issue – the way most bundlers emulate scopes hits the same problem. For example, consider if the TypeScript compiler were structured using modules like the following:

// parser.ts

export function createSourceFile(/*...*/) {

/*...*/

}

// program.ts

import { createSourceFile } from "./parser";

export function createProgram(/*...*/) {

createSourceFile(/*...*/);

}

A naive bundler might always create a function to establish scope for every module, and place exports on a single object. It might look something like the following:

// Runtime helpers for bundle:

function register(moduleName, module) { /*...*/ }

function customRequire(moduleName) { /*...*/ }

// Bundled code:

register("parser", function (exports, require) {

exports.createSourceFile = function createSourceFile(/*...*/) {

/*...*/

};

});

register("program", function (exports, require) {

var parser = require("parser");

exports.createProgram = function createProgram(/*...*/) {

parser.createSourceFile(/*...*/);

};

});

var parser = customRequire("parser");

var program = customRequire("program");

module.exports = {

createSourceFile: parser.createSourceFile,

createProgram: program.createProgram,

};

Each reference of createSourceFile now has to go through parser.createSourceFile, which would still have more runtime overhead compared to if createSourceFile was declared locally.

This is partially necessary to emulate the "live binding" behavior of ECMAScript modules – if someone modifies createSourceFile within parser.ts, it will be reflected in program.ts as well.

In fact, the JavaScript output here can get even worse, as re-exports often need to be defined in terms of getters – and the same is true for every intermediate re-export too!

But for our purposes, let’s just pretend bundlers always write properties and not getters.
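For the curious, the getter-based form can be sketched roughly as follows. This is an illustration only: the bodies of `register` and `customRequire` are assumptions (the example above elides them), and the `counter` and `reexporter` modules are hypothetical.

```typescript
type ModuleExports = Record<string, unknown>;
type RequireFn = (name: string) => ModuleExports;
type ModuleFactory = (exports: ModuleExports, require: RequireFn) => void;

const moduleFactories: Record<string, ModuleFactory> = {};
const moduleCache: Record<string, ModuleExports> = {};

function register(name: string, factory: ModuleFactory): void {
    moduleFactories[name] = factory;
}

function customRequire(name: string): ModuleExports {
    const cached = moduleCache[name];
    if (cached) return cached;
    const factory = moduleFactories[name];
    if (!factory) throw new Error(`Unknown module: ${name}`);
    const moduleExports: ModuleExports = {};
    // Cache before running the factory so that cycles see a partial object.
    moduleCache[name] = moduleExports;
    factory(moduleExports, customRequire);
    return moduleExports;
}

register("counter", (exports) => {
    let count = 0;
    // A getter re-reads 'count' on every access, emulating a live binding.
    Object.defineProperty(exports, "count", { enumerable: true, get: () => count });
    // A plain property only captures the value at definition time.
    exports.snapshot = count;
    exports.increment = () => { count++; };
});

register("reexporter", (exports, require) => {
    const counter = require("counter");
    // Each re-export needs its own getter that forwards to the live value.
    Object.defineProperty(exports, "count", { enumerable: true, get: () => counter.count });
});

const counterModule = customRequire("counter");
const reexporterModule = customRequire("reexporter");
(counterModule.increment as () => void)();
```

After the call to `increment`, reading `count` through either module goes through one or two getter calls, while `snapshot` still holds the stale value. That extra hop per re-export is the cost being described.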

So if bundled modules can also run into these issues, why did we even mention the issues around boilerplate and indirection with namespaces?

Well because the ecosystem around modules is rich, and bundlers have gotten surprisingly good at optimizing some of this indirection away! A growing number of bundling tools are able to not just aggregate multiple modules into one file, but they’re able to perform something called scope hoisting. Scope hoisting attempts to move as much code as possible into the fewest possible shared scopes. So a bundler which performs scope-hoisting might be able to rewrite the above as

function createSourceFile(/*...*/) {

/*...*/

}

function createProgram(/*...*/) {

createSourceFile(/*...*/);

}

module.exports = {

createSourceFile,

createProgram,

};

Putting these declarations in the same scope is typically a win simply because it avoids adding boilerplate code to simulate scopes in a single file – lots of those scope setups and teardowns can be completely eliminated. But because scope hoisting colocates declarations, it also makes it easier for engines to optimize our uses of different functions.

So moving to modules was not just an opportunity to build empathy and iterate more easily – it was a chance for us to make things faster!

The Migration

Unfortunately there’s not a clear 1:1 translation for every codebase using namespaces to modules.

We had some specific ideas of what we wanted our codebase to look like with modules. We definitely wanted to avoid too much disruption to the codebase stylistically, and didn’t want to run into too many "gotchas" through auto-imports. At the same time, our codebase had implicit cycles and that presented its own set of issues.

To perform the migration, we worked on some tooling specific to our repository which we nicknamed the "typeformer". While early versions used the TypeScript API directly, the most up-to-date version used David Sherret’s fantastic ts-morph library.

Part of the approach that made this migration tenable was to break each transformation into its own step and its own commit. That made it easier to iterate on specific steps without having to worry about trivial but invasive differences like changes in indentation. Each time we saw something that was "wrong" in the transformation, we could iterate.

A small (see: very annoying) snag on this transformation was how exports across modules are implicitly resolved. This created some implicit cycles that were not always obvious, and which we didn’t really want to reason about immediately.

But we were in luck – TypeScript’s API needed to be preserved through something called a "barrel" module – a single module that re-exports all the stuff from every other module. We took advantage of that and applied an "if it ain’t broke, don’t fix it (for now)" approach when we generated imports. In other words, in cases where we couldn’t create direct imports from each module, the typeformer simply generated imports from that barrel module.

// program.ts

import { createSourceFile } from "./_namespaces/ts"; // <- not directly importing from './parser'.

We figured eventually, we could (and thanks to a proposed change from Oleksandr Tarasiuk, we will), switch to direct imports across files.

Picking a Bundler

There are some phenomenal new bundlers out there – so we thought about our requirements. We wanted something that

- supported different module formats (e.g. CommonJS, ESM, hacky IIFEs that conditionally set globals…)

- provided good scope hoisting and tree shaking support

- was easy to configure

- was fast

There are several options here that might have been equally good; but in the end we went with esbuild and have been pretty happy with it! We were struck with how fast it enabled our ability to iterate, and how quickly any issues we ran into were addressed. Kudos to Evan Wallace on not just helping uncover some nice performance wins, but also making such a stellar tool.

Bundling and Compiling

Adopting esbuild presented a sort of weird question though – should the bundler operate on TypeScript’s output, or directly on our TypeScript source files?

In other words, should TypeScript transform its .ts files and emit a series of .js files that esbuild will subsequently bundle?

Or should esbuild compile and bundle our .ts files?

The way most people use bundlers these days is the latter. It avoids coordinating extra build steps, intermediate artifacts on disk for each step, and just tends to be faster.

On top of that, esbuild supports a feature most other bundlers don’t – const enum inlining.

This inlining provides a crucial performance boost when traversing our data structures, and until recently the only major tool that supported it was the TypeScript compiler itself.

So esbuild made building directly from our input files truly possible with no runtime compromises.
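As a small illustration of what const enum inlining buys, consider the sketch below. The enum members and the `AstNode` shape are hypothetical, trimmed-down stand-ins, not the compiler's real definitions.

```typescript
// Hypothetical, trimmed-down stand-in for the compiler's syntax kinds.
const enum SyntaxKind {
    Unknown = 0,
    Identifier = 1,
    StringLiteral = 2,
}

interface AstNode {
    kind: SyntaxKind;
}

// With const enum inlining, the comparison below is emitted roughly as
// 'node.kind === 1 /* SyntaxKind.Identifier */', so no enum object
// needs to be consulted while traversing nodes.
function isIdentifier(node: AstNode): boolean {
    return node.kind === SyntaxKind.Identifier;
}

function countIdentifiers(nodes: readonly AstNode[]): number {
    let total = 0;
    for (const node of nodes) {
        if (isIdentifier(node)) total++;
    }
    return total;
}

const identifierCount = countIdentifiers([
    { kind: SyntaxKind.Identifier },
    { kind: SyntaxKind.StringLiteral },
    { kind: SyntaxKind.Identifier },
]);
```

Without inlining, each `SyntaxKind.Identifier` would be a property read on a runtime enum object, which is the same kind of indirection discussed earlier for namespaces.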

But TypeScript is also a compiler, and we need to test our own behavior! The TypeScript compiler needs to be able to compile the TypeScript compiler and produce reasonable results, right?

So while adding a bundler was helping us actually experience what we were shipping to our users, we were at risk of losing what it’s like to downlevel-compile ourselves and quickly see if everything still works.

We ended up with a compromise.

When running in CI, TypeScript will also be run as unbundled CommonJS emitted by tsc.

This ensures that TypeScript can still be bootstrapped, and can produce a valid working version of the compiler that passes our test suite.

For local development, running tests still requires a full type-check from TypeScript by default, with compilation from esbuild.

This is partially necessary to run certain tests.

For example, we store a "baseline" or "snapshot" of TypeScript’s declaration files.

Whenever our public API changes, we have to check the new .d.ts file against the baseline to see what’s changed; but producing declaration files requires running TypeScript anyway.

But that’s just the default. We can now easily run and debug tests without a full type-check from TypeScript if we really want. So transforming JavaScript and type-checking have been decoupled for us, and can run independently if we need.

Preserving Our API and Bundling Our Declaration Files

As previously mentioned, one upside of using namespaces was that to create our output files, we could just concatenate our input files together.

But, this also applies to our output .d.ts files as well.

Given the earlier example:

// src/compiler/parser.ts

namespace ts {

export function createSourceFile(/*...*/) {

/*...*/

}

}

// src/compiler/program.ts

namespace ts {

export function createProgram(/*...*/) {

createSourceFile(); /*...*/

}

}

Our original build system would produce a single output .js and .d.ts file.

The file tsserverlibrary.d.ts might look like this:

namespace ts {

function createSourceFile(/*...*/): /* ...*/;

}

namespace ts {

function createProgram(/*...*/): /* ...*/;

}

When multiple namespaces exist in the same scope, they undergo something called declaration merging, where all their exports merge together.

So these namespaces formed a single final ts namespace and everything just worked.

TypeScript’s API did have a few "nested" namespaces which we had to maintain during our migration.

One input file required to create tsserverlibrary.js looked like this:

// src/server/protocol.ts

namespace ts.server.protocol {

export type Request = /*...*/;

}

Which, as an aside and refresher, is the same as writing this:

// src/server/protocol.ts

namespace ts {

export namespace server {

export namespace protocol {

export type Request = /*...*/;

}

}

}

and it would be tacked onto the bottom of tsserverlibrary.d.ts:

namespace ts {

function createSourceFile(/*...*/): /* ...*/;

}

namespace ts {

function createProgram(/*...*/): /* ...*/;

}

namespace ts.server.protocol {

type Request = /*...*/;

}

and declaration merging would still work fine.

In a post-namespaces world, we wanted to preserve the same API while using solely modules – and our declaration files had to be able to model this as well.

To keep things working, each namespace in our public API was modeled by a single file which re-exported everything from individual smaller files. These are often called "barrel modules" because they… uh… re-package everything in… a… barrel?

We’re not sure.

Anyway! The way that we maintained the same public API was by using something like the following:

// COMPILER LAYER

// src/compiler/parser.ts

export function createSourceFile(/*...*/) {

/*...*/

}

// src/compiler/program.ts

import { createSourceFile } from "./_namespaces/ts";

export function createProgram(/*...*/) {

createSourceFile(/*...*/);

}

// src/compiler/_namespaces/ts.ts

export * from "./parser";

export * from "./program";

// SERVER LAYER

// src/server/protocol.ts

export type Request = /*...*/;

// src/server/_namespaces/ts.server.protocol.ts

export * from "../protocol";

// src/server/_namespaces/ts.server.ts

export * as protocol from "./ts.server.protocol";

// src/server/_namespaces/ts.ts

export * from "../../compiler/_namespaces/ts";

export * as server from "./ts.server";

Here, distinct namespaces in each of our projects were replaced with a barrel module in a folder called _namespaces.

| Namespace | Module Path within Project |
|---|---|
| namespace ts | ./_namespaces/ts.ts |
| namespace ts.server | ./_namespaces/ts.server.ts |
| namespace ts.server.protocol | ./_namespaces/ts.server.protocol.ts |

There is some "needless" indirection, but it provided a reasonable pattern for the modules transition.

Now our .d.ts emit can of course handle this situation – each .ts file would produce a distinct output .d.ts file.

This is what most people writing TypeScript use;

however, our situation has some unique features which make using it as-is challenging:

- Some consumers already rely on the fact that TypeScript’s API is represented in a single d.ts file. These consumers include projects which expose the internals of TypeScript’s API (e.g. ts-expose-internals, byots), and projects which bundle/wrap TypeScript (e.g. ts-morph). So keeping things in a single file was desirable.

- We export many enums like SyntaxKind or SymbolFlags in our public API which are actually const enums. Exposing const enums is generally A Bad Idea, as downstream TypeScript projects may accidentally assume these enums’ values never change and inline them. To prevent that from happening, we need to post-process our declarations to remove the const modifier. This would be challenging to keep track of over every single output file, so again, we probably want to keep things in a single file.

- Some downstream users augment TypeScript’s API, declaring that some of our internals exist; it’d be best to avoid breaking these cases even if they’re not officially supported, so whatever we ship needs to be similar enough to our old output to not cause any surprises.

- We track how our APIs change, and diff between the "old" and "new" APIs on every full test run. Keeping this limited to a single file is desirable.

- Given that each of our JavaScript library entry points are just single files, it really seemed like the most "honest" thing to do would be to ship single declaration files for each of those entry points.

These all point toward one solution: declaration file bundling.

Just like there are many options for bundling JavaScript, there are many options for bundling .d.ts files:

api-extractor, rollup-plugin-dts, tsup, dts-bundle-generator, and so on.

These all satisfy the end requirement of "make a single file", however, the additional requirement to produce a final output which declared our API in namespaces similar to our old output meant that we couldn’t use any of them without a lot of modification.

In the end, we opted to roll our own mini-d.ts bundler suited specifically for our needs.

This script clocks in at about 400 lines of code, naively walking each entry point’s exports recursively and emitting declarations as-is.

Given the previous example, this bundler outputs something like:

namespace ts {

function createSourceFile(/*...*/): /* ...*/;

function createProgram(/*...*/): /* ...*/;

namespace server {

namespace protocol {

type Request = /*...*/;

}

}

}

This output is functionally equivalent to the old namespace-concatenation output, along with the same const enum to enum transformation and @internal removal that our previous output had.

Removing the repetition of namespace ts { } also made the declaration files slightly smaller (~200 KB).
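The general shape of such a walk can be sketched as follows. This is a toy model under stated assumptions, not the actual script: the `ModuleInfo` structure, the module names, and the declaration strings are all hypothetical, the re-export graph is assumed to be acyclic, and the const-to-enum step is done with a naive text replacement.

```typescript
// Hypothetical in-memory model of one module's declaration output.
interface ModuleInfo {
    declarations: string[];                // declaration text, emitted as-is
    exportStarFrom: string[];              // export * from "..."
    exportStarAs: Record<string, string>;  // export * as name from "..."
}

type ModuleGraph = Record<string, ModuleInfo>;

function mod(
    declarations: string[],
    exportStarFrom: string[] = [],
    exportStarAs: Record<string, string> = {},
): ModuleInfo {
    return { declarations, exportStarFrom, exportStarAs };
}

// Emits the body of a namespace by recursively walking re-exports.
function emitBody(graph: ModuleGraph, moduleName: string, indent: string): string[] {
    const info = graph[moduleName];
    if (!info) throw new Error(`Unknown module: ${moduleName}`);
    const lines: string[] = [];
    for (const declaration of info.declarations) {
        // Naive post-processing: drop 'const' from 'const enum'.
        lines.push(indent + declaration.replace(/\bconst enum\b/, "enum"));
    }
    for (const target of info.exportStarFrom) {
        // 'export *' flattens the target into the current namespace.
        lines.push(...emitBody(graph, target, indent));
    }
    for (const nestedName of Object.keys(info.exportStarAs)) {
        // 'export * as name' becomes a nested namespace.
        lines.push(...emitNamespace(graph, info.exportStarAs[nestedName], nestedName, indent));
    }
    return lines;
}

function emitNamespace(graph: ModuleGraph, entry: string, name: string, indent = ""): string[] {
    return [
        `${indent}namespace ${name} {`,
        ...emitBody(graph, entry, indent + "    "),
        `${indent}}`,
    ];
}

const exampleGraph: ModuleGraph = {
    "compiler/parser": mod([
        "function createSourceFile(text: string): SourceFile;",
        "const enum SyntaxKind { Unknown = 0 }",
    ]),
    "compiler/program": mod(["function createProgram(files: string[]): Program;"]),
    "compiler/_namespaces/ts": mod([], ["compiler/parser", "compiler/program"]),
    "server/protocol": mod(["type Request = { command: string };"]),
    "server/_namespaces/ts.server.protocol": mod([], ["server/protocol"]),
    "server/_namespaces/ts.server": mod([], [], { protocol: "server/_namespaces/ts.server.protocol" }),
    "server/_namespaces/ts": mod([], ["compiler/_namespaces/ts"], { server: "server/_namespaces/ts.server" }),
};

const bundledLines = emitNamespace(exampleGraph, "server/_namespaces/ts", "ts");
```

Joining `bundledLines` with newlines yields a single `namespace ts { ... }` block with `server` and `protocol` nested inside it, mirroring the structure of the output shown above.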

It’s important to note that this bundler is not intended for general use. It naively walks imports and emits declarations as-is, and cannot handle:

- Unexported Types – if an exported function references an unexported type, TypeScript’s d.ts emit will still declare the type locally.

      export function doSomething(obj: Options): void;

      // Not exported, but used by 'doSomething'!
      interface Options {
          // ...
      }

  This allows an API to talk about specific types, even if API consumers can’t actually refer to these types by name.

  Our bundler cannot emit unexported types, but can detect when it needs to be done, and issues an error indicating that the type must be exported. This is a fine trade-off, since a complete API tends to be more usable.

- Name Conflicts – two files may separately declare a type named Info – one which is exported, and the other which is purely local.

      // foo.ts
      export interface Info {
          // ...
      }
      export function doFoo(info: Info) {
          // ...
      }

      // bar.ts
      interface Info {
          // ...
      }
      export function doBar(info: Info) {
          // ...
      }

  This shouldn’t be a problem for a robust declaration bundler. The unexported Info could be declared with a new name, and uses could be updated.

  But our declaration bundler isn’t robust – it doesn’t know how to do that. Its first attempt is to just drop the locally declared type, and keep the exported type. This is very wrong, and it’s subtle because it usually doesn’t trigger any errors!

  We made the bundler a little smarter so that it can at least detect when this happens. It now issues an error to fix the ambiguity, which can be done by renaming and exporting the missing type. Thankfully, there were not many examples of this in the TypeScript API, as namespace merging already meant that declarations with the same name across files were merged.

- Import Qualifiers – occasionally, TypeScript will infer a type that’s not imported locally. In those cases, TypeScript will write that type as something like import("./types").SomeType. These import(...) qualifiers can’t be left in the output since the paths they refer to don’t exist anymore. Our bundler detects these types, and requires that the code be fixed. Typically, this just means explicitly annotating the function with a type. Bundlers like api-extractor can actually handle this case by rewriting the type reference to point at the correct type.

So while there were some limitations, for us these were all perfectly okay (and even desirable).
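As an illustration of the last limitation, detecting import qualifiers could look something like the sketch below. It is a hypothetical text-based approximation (the function name and the regular expression are assumptions made for this example); a real implementation would more likely inspect the syntax tree than match on text.

```typescript
interface ImportQualifier {
    path: string;
    typeName: string;
}

// Finds occurrences of import("<path>").<TypeName> in declaration text.
function findImportQualifiers(declarationText: string): ImportQualifier[] {
    const pattern = /import\("([^"]+)"\)\.([A-Za-z_$][\w$]*)/g;
    const found: ImportQualifier[] = [];
    let match: RegExpExecArray | null;
    while ((match = pattern.exec(declarationText)) !== null) {
        found.push({ path: match[1], typeName: match[2] });
    }
    return found;
}

const offending = findImportQualifiers(
    'export declare function getThing(): import("./types").SomeType;',
);
const clean = findImportQualifiers(
    "export declare function getThing(): SomeType;",
);
```

A build step could then fail with a message asking for an explicit annotation whenever `offending` is non-empty.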

Flipping the Switch!

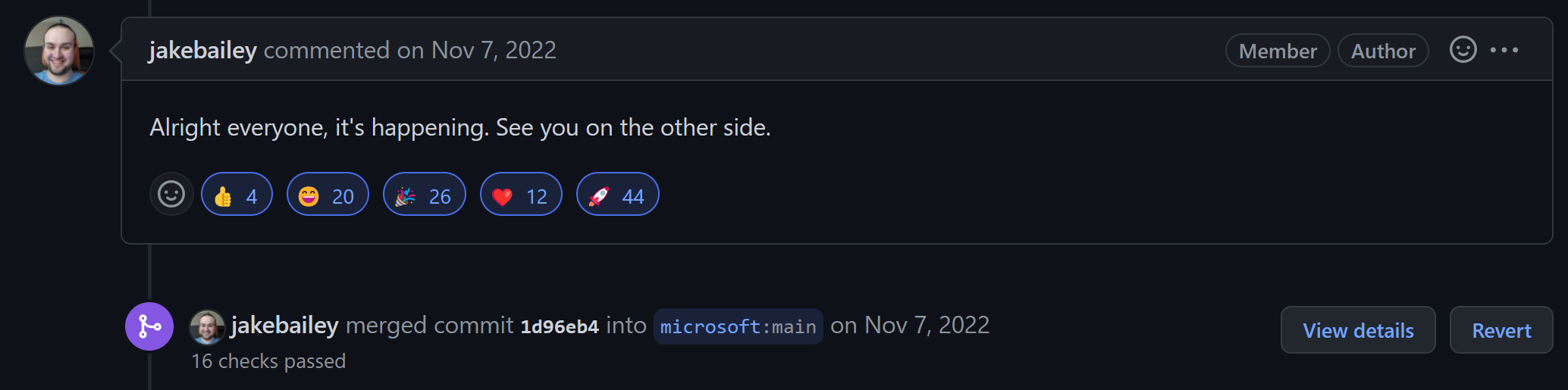

Eventually all these decisions and meticulous planning had to go somewhere! What was years in the making turned into a hefty pull request with over 282,000 lines changed. Plus, the pull request had to be refreshed periodically given that we couldn’t freeze the TypeScript codebase for a long amount of time. In a sense, we were trying to replace a bridge while our team was still driving on it.

Luckily, the automation of our typeformer could re-construct each step of the migration with a commit, which also helped with review. On top of that, our test suite and all of our external test infrastructure really gave us confidence to make the move.

So finally, we asked our team to take a brief pause from making changes.

We hit that merge button, and just like that, ~~Jake convinced git he was the author of every line in the TypeScript codebase~~ TypeScript was using modules!

Wait, What Was That About Git?

Okay, we’re half joking about that git issue. We do often use git blame to understand where a change came from, and unfortunately by default, git does think that almost every line came from our "Convert the codebase to modules" commit.

Fortunately, git can be configured with blame.ignoreRevsFile to ignore specific commits, and GitHub ignores commits listed in a top-level .git-blame-ignore-revs file by default.

Spring Cleaning

While we were making some of these changes, we looked for opportunities to simplify everything we were shipping.

We found that TypeScript had a few files that truthfully weren’t needed anymore.

lib/typescriptServices.js was the same as lib/typescript.js, and all of lib/protocol.d.ts was basically copied out of lib/tsserverlibrary.d.ts from the ts.server.protocol namespace.

In TypeScript 5.0, we chose to drop these files and recommend using these backward-compatible alternatives. It was nice to shed a few megabytes while knowing we had good workarounds.

Spaces and Minifying?

One nice surprise we found from using esbuild was that on-disk size was reduced by more than we expected. It turns out that a big reason for this is that esbuild uses 2 spaces for indentation in output instead of the 4 spaces that TypeScript uses. When gzipping, the difference is very small; but on disk, we saved a considerable amount.
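To get a feel for why indentation width matters on disk, here is a tiny sketch that rewrites leading indentation and compares sizes. The `reindent` helper and the sample source are hypothetical and only look at leading spaces.

```typescript
// Rewrites leading indentation from 'fromWidth' spaces per level to 'toWidth'.
function reindent(source: string, fromWidth: number, toWidth: number): string {
    return source
        .split("\n")
        .map((line) => {
            const match = /^ */.exec(line);
            const leading = match ? match[0].length : 0;
            const levels = Math.floor(leading / fromWidth);
            const remainder = leading % fromWidth;
            // 'new Array(n + 1).join(" ")' produces n spaces.
            const newIndent = new Array(levels * toWidth + remainder + 1).join(" ");
            return newIndent + line.slice(leading);
        })
        .join("\n");
}

const fourSpaceSample = [
    "function f() {",
    "    if (x) {",
    "        return 1;",
    "    }",
    "}",
].join("\n");

const twoSpaceSample = reindent(fourSpaceSample, 4, 2);
const charactersSaved = fourSpaceSample.length - twoSpaceSample.length;
```

In this five-line sample the saving is 8 characters out of 53. Deeply nested code in a multi-megabyte bundle has proportionally more leading whitespace to shed.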

This did prompt a question of whether we should start performing any minification on our outputs. As tempting as it was, this would complicate our build process, make stack trace analysis harder, and force us to ship with source maps (or find a source map host, kind of like what a symbol server does for debug information).

We decided against minifying (for now). Anyone shipping parts of TypeScript on the web can already minify our outputs (which we do on the TypeScript playground), and gzipping already makes downloads from npm pretty fast. While minifying felt like "low hanging fruit" for an otherwise radical change to our build system, it was just creating more questions than answers. Plus, we have other better ideas for reducing our package size.

Performance Slowdowns?

When we dug a bit deeper, we noticed that while end-to-end compile times had reduced on all of our benchmarks, we had actually slowed down on parsing. So what gives?

We didn’t mention it much, but when we switched to modules, we also switched to a more modern emit target. We switched from ECMAScript 5 to ECMAScript 2018. Using more native syntax meant that we could shed a few bytes in our output, and would have an easier time debugging our code. But it also meant that engines had to perform the exact semantics as mandated by these native constructs.

You might be surprised to learn that let and const – two of the most commonly used features in modern JavaScript – have a little bit of overhead.

That’s right!

let and const variables can’t be referenced before their declarations have been run.

// error! 'x' is referenced in 'f'

// before it's declared!

f();

let x = 10;

function f() {

console.log(x);

}

And in order to enforce this, engines usually insert guards whenever let and const variables are captured by a function.

Every time a function references these variables, those guards have to occur at least once.

When TypeScript targeted ECMAScript 5, these lets and consts were just transformed into vars.

That meant that if a let or const-declared variable was accessed before it was initialized, we wouldn’t get an error.

Instead, its value would just be observed as undefined.

There had been instances where this difference meant that TypeScript’s downlevel-emit wasn’t behaving as per the spec.

When we switched to a newer output target, we ended up fixing a few instances of use-before-declaration – but they were rare.
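The difference between the two behaviors can be observed with a sketch like the one below (the function names are hypothetical). Note that the outcome of the let version depends on the emit target: run natively on an ES2015+ target it hits the temporal dead zone, whereas if the code is down-leveled to ES5 the let becomes a var and the value is simply observed as undefined.

```typescript
// Reads a 'let' binding through a closure before its declaration has run.
function readLetBeforeInit(): string {
    const read = (): number => value;
    let outcome: string;
    try {
        outcome = String(read());
    } catch (error) {
        outcome = error instanceof ReferenceError ? "ReferenceError" : "unexpected error";
    }
    let value = 10;
    return outcome;
}

// Same shape, but with 'var': the binding is hoisted and starts out as undefined.
function readVarBeforeInit(): string {
    const read = (): number => value;
    const outcome = String(read());
    var value = 10; // 'var' is used here on purpose
    return outcome;
}

const letOutcome = readLetBeforeInit();
const varOutcome = readVarBeforeInit();
```

The engine-inserted guard is what makes the let version able to throw at all, and it is that guard which has a cost when such variables are read from closures.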

When we finally flipped the switch to a more modern output target, we found that engines spent a lot of time performing these checks on let and const.

As an experiment, we tried running Babel on our final bundle to only transform let and const into var.

We found that often 10%-15% of our parse time could be dropped from switching to var everywhere.

This translated to up to 5% of our end-to-end compile time being just these let/const checks!

At the moment, esbuild doesn’t provide an option to transform let and const to var.

We could have used Babel here – but we really didn’t want to introduce another step into our build process.

Shu-yu Guo has already been investigating opportunities to eliminate many of these runtime checks with some promising results – but some checks would still need to be run on every function, and we were looking for a win today.

We instead found a compromise. We realized that most major components of our compiler follow a pretty similar pattern where a top-level scope contains a good chunk of state that’s shared by other closures.

export function createScanner(/*...*/) {

let text;

let pos;

let end;

let token;

let tokenFlags;

// ...

let scanner = {

getToken: () => token,

// ...

};

return scanner;

}

The biggest reason we really wanted to use let and const in the first place was because vars have the potential to leak scope out of blocks;

but at the top level scope of a function, there’s way fewer "downsides" to using vars.

So we asked ourselves how much performance we could win back by switching to var in just these contexts.

It turns out that we were able to get rid of most of these runtime checks by doing just that!

So in a few select places in our compiler, we’ve switched to vars, where we turn off our "no var" ESLint rule just for those regions.

The createScanner function from above now looks like this:

export function createScanner(/*...*/) {

// Why var? It avoids TDZ checks in the runtime which can be costly.

// See: https://github.com/microsoft/TypeScript/issues/52924

/* eslint-disable no-var */

var text;

var pos;

var end;

var token;

var tokenFlags;

// ...

let scanner = {

getToken: () => token,

// ...

};

/* eslint-enable no-var */

return scanner;

}

This isn’t something we’d recommend most projects do – at least not without profiling first. But we’re happy we found a reasonable workaround here.

Where’s the ESM?

As we mentioned previously, while TypeScript is now written with modules, the actual JS files we ship have not changed format.

Our libraries still act as CommonJS when executed in a CommonJS environment (module.exports is defined), or declare a top-level var ts otherwise (for <script>).
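That dual behavior can be approximated with the sketch below. It is not the code that ships in the package: the environment is passed in as parameters (the hypothetical `cjsModule` and `globalScope`) so that the example can run anywhere, whereas a real bundle would check the ambient `module` and declare a top-level variable.

```typescript
// Hypothetical shape standing in for the CommonJS 'module' object.
interface CommonJsModule {
    exports: unknown;
}

// Exposes 'api' via CommonJS when available, otherwise as a global named 'ts'.
function exposeApi(
    api: object,
    cjsModule: CommonJsModule | undefined,
    globalScope: Record<string, unknown>,
): "commonjs" | "global" {
    if (cjsModule !== undefined && cjsModule.exports) {
        cjsModule.exports = api;
        return "commonjs";
    }
    globalScope["ts"] = api;
    return "global";
}

const fakeApi = { version: "hypothetical" };
const fakeModule: CommonJsModule = { exports: {} };
const fakeGlobal: Record<string, unknown> = {};

const inCommonJs = exposeApi(fakeApi, fakeModule, {});
const inScriptTag = exposeApi(fakeApi, undefined, fakeGlobal);
```

Either way, consumers see the same object; only the mechanism used to hand it over differs.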

There’s been a long-standing request for TypeScript to ship as ECMAScript modules (ESM) instead (#32949).

Shipping ECMAScript modules would have many benefits:

- Loading the ESM can be faster than un-bundled CJS if the runtime can load multiple files in parallel (even if they are executed in order).

- Native ESM doesn’t make use of export helpers, so an ESM output can be as fast as a bundled/scope-hoisted output. CJS may need export helpers to simulate live bindings, and in codebases like ours where we have chains of re-exports, this can be slow.

- The package size would be smaller because we’re able to share code between our different entrypoints rather than making individual bundles.

- Those who bundle TypeScript could potentially tree shake parts they aren’t using. This could even help the many users who only need our parser (though our codebase still needs more changes to make that work).
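The point about export helpers can be illustrated with a small sketch. It assumes a getter-based helper, which is one common way for tools to emulate ESM live bindings in CommonJS output; the names count, increment, snapshotExports, and liveExports are hypothetical.

```typescript
// Hypothetical module state.
let count = 0;

function increment(): void {
    count++;
}

// Snapshot export: the value is copied once, so later updates are invisible.
const snapshotExports = { count, increment };

// Getter-based export: every read goes through a function call. The value
// stays current, but each access pays for the indirection.
const liveExports = { increment } as { increment: () => void; readonly count: number };
Object.defineProperty(liveExports, "count", {
    enumerable: true,
    get: () => count,
});

increment();

console.log(snapshotExports.count); // 0
console.log(liveExports.count);     // 1
```

With chains of re-exports, a single read may pass through several such getters, whereas native ESM (or a scope-hoisted bundle) refers to the binding directly.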

That all sounds great! But we aren’t doing that, so what’s up with that?

The main reason comes down to the current ecosystem. While a lot of packages are adding ESM (or even going ESM only), an even greater portion are still using CommonJS. It’s unlikely we can ship only ESM in the near future, so we have to keep shipping some CommonJS to not leave users behind.

That being said, there is an interesting middle ground…

Shipping ESM Executables (And More?)

Previously, we mentioned that our libraries still act as CommonJS.

But TypeScript isn’t just a library; it’s also a set of executables, including tsc and tsserver, as well as a few other smaller bundles for automatic type acquisition (ATA), file watching, and cancellation.

The critical observation is that these executables don’t need to be imported; they’re executables!

Because these don’t need to be imported by anyone (not even https://vscode.dev, which uses tsserverlibrary.js and a custom host implementation), we are free to convert these executables to whatever module format we’d like, so long as the behavior doesn’t change for users invoking these executables.

This means that, so long as we move our minimum Node version to v12.20, we could change tsc, tsserver, and so on, into ESM.

One gotcha is that the paths to our executables within our package are "well known"; a surprising number of tools, package.json scripts, editor launch configurations, etc., use hard-coded paths like ./node_modules/typescript/bin/tsc.js or ./node_modules/typescript/lib/tsc.js.

As our package.json doesn’t declare "type": "module", Node assumes those files to be CommonJS, so emitting ESM isn’t enough.

We could try to use "type": "module", but that would add a whole slew of other challenges.

Instead, we’ve been leaning towards just using a dynamic import() call within a CommonJS file to kick off an ESM file that will do the actual work.

In other words, we’d replace tsc.js with a wrapper like this:

// https://en.wikipedia.org/wiki/Fundamental_theorem_of_software_engineering
(() => import("./esm/tsc.mjs"))().catch((e) => {
    console.error(e);
    process.exit(1);
});

This would not be observable by anyone invoking the tool, and we’re now free to emit ESM.

Then much of the code currently duplicated across tsc.js, tsserver.js, typingsInstaller.js, etc. could be shared!

This would turn out to save another 7 MB in our package, which is great for a change which nobody can observe.

What that ESM would actually look like and how it’s emitted is a different question. The most compatible near-term option would be to use esbuild’s code splitting feature to emit ESM.

Farther down the line, we could even fully convert the TypeScript codebase to a module format like Node16/NodeNext or ES2022/ESNext, and emit ESM directly!

Or, if we still wanted to ship only a few files, we could expose our APIs as ESM files and turn them into a set of entry points for a bundler.

Either way, there’s potential for making the TypeScript package on npm much leaner, but it would be a much more difficult change.

In any case, we’re absolutely thinking about this for the future; converting the codebase from namespaces to modules was the first big step in moving forward.

API Patching

As we mentioned, one of our goals was to maintain compatibility with TypeScript’s existing API; however, CommonJS modules allowed people to use the TypeScript API in ways we did not anticipate.

In CommonJS, modules are plain objects, providing no default protection from others modifying your library’s internals! So what we found over time was that lots of projects were monkey-patching our APIs! This put us in a tough spot, because even if we wanted to support this patching, it would be (for all intents and purposes) infeasible.
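One plausible way to see why patching becomes unreliable is sketched below. It assumes a bundled, scope-hoisted build in which internal callers refer to local functions directly; MiniLib, parse, and check are hypothetical names, not TypeScript APIs.

```typescript
// Hypothetical mini-library for illustration only.
interface MiniLib {
    parse(text: string): number;
    check(text: string): boolean;
}

// Namespace-style: internal calls go through the shared object,
// so replacing a property changes what internal callers see.
const nsLib: MiniLib = {
    parse(text: string): number {
        return text.length;
    },
    check(text: string): boolean {
        return nsLib.parse(text) > 0;
    },
};

// Bundle-style: internal calls refer to the local function directly,
// so the exported object is only a view for outside consumers.
function createBundledLib(): MiniLib {
    function parse(text: string): number {
        return text.length;
    }
    function check(text: string): boolean {
        return parse(text) > 0;
    }
    return { parse, check };
}

const bundledLib = createBundledLib();

// Monkey-patch both libraries the same way.
nsLib.parse = () => 0;
bundledLib.parse = () => 0;

console.log(nsLib.check("abc"));      // false: the patch is observed internally
console.log(bundledLib.check("abc")); // true: the patch is silently ignored
```

In the second shape the assignment still succeeds, which makes the failure mode easy to miss: nothing throws, but the library keeps using its original function.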

In many cases, we helped some projects move to more appropriate backwards-compatible APIs that we exposed. In other cases, there are still some challenges helping our community move forward – but we’re eager to chat and help project maintainers out!

Accidentally Exported

On a related note, we aimed to keep some "soft-compatibility" around existing APIs that had been exported only because our use of namespaces required it.

With namespaces, internal functions had to be exported just so they could be used by different files.

// utilities.ts
namespace ts {
    /** @internal */
    export function doSomething() {
    }
}

// parser.ts
namespace ts {
    // ...

    let val = doSomething();
}

// checker.ts
namespace ts {
    // ...

    let otherVal = doSomething();
}

Here, doSomething had to be exported so that it could be accessed from other files.

As a special step in our build, we’d just erase them away from our .d.ts files if they were marked with a comment like /** @internal */, but they’d still be reachable from the outside at run time.

Bundling modules, in contrast, won’t leak every file’s exports. If an entry point doesn’t re-export a function from another file, it will be copied in as a local.
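The difference can be sketched by writing out, by hand, roughly the shape each approach produces at run time. This is a simplified illustration rather than actual compiler or bundler output, and nsApi, bundledApi, and parse are hypothetical names.

```typescript
type ApiObject = Record<string, unknown>;

// Roughly what a namespace with an exported function turns into: the
// function is attached to the shared object, even if it was marked
// /** @internal */ and erased from the .d.ts files.
const nsApi: ApiObject = {};
(function (ts: ApiObject) {
    function doSomething(): number {
        return 42;
    }
    ts.doSomething = doSomething;
})(nsApi);

// Roughly what a bundled entry point looks like when it does not re-export
// doSomething: the function is just a local inside the bundle.
const bundledApi = (() => {
    function doSomething(): number {
        return 42;
    }
    function parse(): number {
        return doSomething();
    }
    return { parse };
})();

console.log("doSomething" in nsApi);      // true: reachable from the outside
console.log("doSomething" in bundledApi); // false: a "hard-private" local
console.log(bundledApi.parse());          // 42: still usable internally
```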

Technically with TypeScript 5.0, we could have not re-exported every /** @internal */-marked function, and made them "hard-privates".

This seemed unfriendly to projects experimenting with TypeScript’s APIs.

We also would need to start explicitly exporting everything in our public API.

That might be a best practice, but it was more than we wanted to commit to for 5.0.

We opted to keep our behavior the same in TypeScript 5.0.

How’s the Dog Food?

We claimed earlier that modules would help us empathize more with our users. How true did that end up being?

Well, first off, just consider all the packaging choices and build tool decisions we had to make! Understanding these issues has put us way closer to what other library authors currently experience, and it’s given us a lot of food for thought.

But there were some obvious user experience problems we hit as soon as we switched to modules.

Things like auto-imports and the "Organize Imports" command in our editors occasionally felt "off" and often conflicted with our linter preferences.

We also felt some pain around project references, where toggling flags between a "development" and a "production" build would have required a totally parallel set of tsconfig.json files.

We were surprised we hadn’t received more feedback about these issues from the outside, but we’re happy we caught them.

And the best part is that fixes for many of these issues, like respecting case-insensitive import sorting and passing emit-specific flags under --build, are already implemented for TypeScript 5.0!

What about project-level incrementality?

It’s not clear if we got the improvements that we were looking for.

Incremental checking from tsc doesn’t happen in under a second or anything like that.

We think part of this might stem from cycles between files in each project.

We also think that because most of our work tends to be on large root files like our shared types, scanner, parser, and checker, it necessitates checking almost every other file in our project.

This is something we’d like to investigate in the future, and hopefully it translates to improvements for everyone.

The Results!

After all these steps, we achieved some great results!

- A 46% reduction in our uncompressed package size on npm

- A 10%-25% speed-up

- Lots of UX improvements

- A more modern codebase

That performance improvement is a bit co-mingled with other performance work we’ve done in TypeScript 5.0 – but a surprising amount of it came from modules and scope-hoisting.

We’re ecstatic about our faster, more modern codebase with its dramatically streamlined build. We hope it makes TypeScript 5.0, and every future release, a joy for you to use.

Happy Hacking!

– Daniel Rosenwasser, Jake Bailey, and the TypeScript Team
